What is data validation?

Data validation is the practice of checking the integrity, accuracy and structure of data before it is used in one or more business operations. Validated data can then be used with more confidence for data analytics or business intelligence applications, or for training machine learning models. Data is also often validated to ensure its integrity for financial accounting or regulatory compliance.

Why validate data?

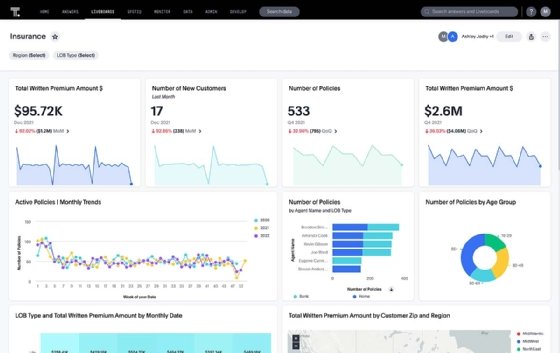

For data scientists, data analysts and others who work with data and require accurate results, data validation is a very important process. For those users, the output of the systems they use can only be as good as the data the operations are based on. These operations can include machine learning or artificial intelligence models, data analytics reports, and business intelligence dashboards.

Validating the data helps ensure it is accurate, which means systems that rely on a validated data set are more likely to produce accurate results as well.

Data validation also ensures the consistency, accuracy and completeness of data, particularly if data is being moved, or migrated, between locations or if data from different sources is being merged. As data moves from one location to another, different needs for the data arise based on how the data is being used.

Data validation ensures the data is correct for specific contexts. The right type of data validation makes the data useful for an organization or for a specific application operation. Ensuring the validity and meaningfulness of the data set facilitates useful analytics for a wide variety of applications. It also prevents issues related to data inconsistencies or corruption. For example, if data is not in the right format to be consumed by a specific system, then the data can't be used easily -- or at all -- since the system might not be able to read it.

Data validation is also often related to data quality. The validation process can be used to measure data quality, which helps ensure that a given data set draws on sources that are not only high quality, but also authoritative and accurate. Higher-quality, validated data reduces the need for data cleansing and its associated costs, which can be very high if cleansing is done later in a data-driven or data-dependent process.

Finally, data is also validated as part of many business application workflows. Examples include spell checks and rule setting for the creation of strong system, account, application and website passwords. In these types of workflows, the use of automated data validation systems eliminates the need for human intervention, thus speeding up the workflow, improving output consistency and preventing errors.

What are the different types of data validation?

Data can be examined as part of a validation process in a variety of ways, including data type, constraint, structure, consistency and code validation. Each type is designed to make sure the data meets specific requirements to be useful.

- Data type validation is common and confirms that the data in each field, column, list, range or file matches a specified data type and format.

- Constraint validation checks to see if a given data field input fits a specified requirement within certain ranges. For example, it could verify that a data field has a minimum or maximum number of characters.

- Structure, or structured, validation ensures data is compliant with a specified data format, structure or schema.

- Consistency validation makes sure data styles are consistent. For example, it could confirm that all values are listed to two decimal places.

- Code validation is similar to a consistency check and confirms that codes used for different data inputs are correct. For example, it could check that a country code or North American Industry Classification System code is correct.
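The five validation types above can be sketched as small checks in Python. This is a minimal illustration only, not a reference implementation: the function names, the sample record and the short list of country codes are all hypothetical, and a real code check would use an authoritative code list.

```python
import re

# Hypothetical, deliberately incomplete code list used only for illustration.
KNOWN_COUNTRY_CODES = {"US", "CA", "MX", "GB", "DE"}


def check_data_type(value, expected_type):
    """Data type validation: the value is an instance of the expected type."""
    # bool is a subclass of int in Python, so reject it when an int is expected.
    if expected_type is int and isinstance(value, bool):
        return False
    return isinstance(value, expected_type)


def check_constraint(text, min_len, max_len):
    """Constraint validation: the text length falls within an allowed range."""
    return min_len <= len(text) <= max_len


def check_structure(record, schema):
    """Structure validation: every schema field is present with the right type."""
    return all(
        name in record and check_data_type(record[name], field_type)
        for name, field_type in schema.items()
    )


def check_consistency(amount_text):
    """Consistency validation: the amount is written with two decimal places."""
    return re.fullmatch(r"-?\d+\.\d{2}", amount_text) is not None


def check_code(country_code):
    """Code validation: the code appears in the list of known codes."""
    return country_code in KNOWN_COUNTRY_CODES


# Hypothetical record and schema for demonstration.
record = {"name": "Ada", "age": 36, "country": "CA", "balance": "120.50"}
schema = {"name": str, "age": int, "country": str, "balance": str}

print(check_structure(record, schema))
print(check_constraint(record["name"], 1, 50))
print(check_consistency(record["balance"]))
print(check_code(record["country"]))
```

Keeping each check as a separate function makes it possible to apply only the checks a given field or data set needs.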

How to perform data validation

One basic and common way to validate data involves using a spreadsheet program such as Microsoft Excel or Google Sheets. In those applications, the data validation process is a straightforward, integrated feature. Excel and Sheets both have a menu item listed as Data > Data Validation. By selecting the feature, users can choose the specific data type or constraint validation required for a given file or data range.

Excel supports the validation of many different types of data:

- Whole numbers.

- Decimal numbers.

- Dates.

- Time.

- Text length.

Users can also enter custom formulas or restrict a cell to values chosen from a drop-down list. In addition, they can select allowable values for the data, add an input message that appears when a cell is selected and create a customized error alert that appears if there's an attempt to enter invalid data.

Data validation can also be done programmatically in an application context for an input value. For example, when an input value such as a password is submitted, a script can check that it meets a length constraint.
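A programmatic length check of this kind might look like the following Python sketch. The function name and the limits of 12 and 64 characters are assumptions chosen for illustration; actual limits depend on the application's password policy.

```python
def validate_password_length(password, min_length=12, max_length=64):
    """Return a list of error messages; an empty list means the check passed.

    The default limits are illustrative assumptions, not a recommended policy.
    """
    errors = []
    if len(password) < min_length:
        errors.append(f"must be at least {min_length} characters")
    if len(password) > max_length:
        errors.append(f"must be at most {max_length} characters")
    return errors


print(validate_password_length("short"))
print(validate_password_length("a-much-longer-passphrase"))
```

Returning a list of messages rather than a single true or false value lets the application tell the user which rule failed.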

Data validation tools

Many commercial and open source, or freely shared, data validation tools are available to validate and repair data sets and ensure they conform to predefined rules or standards. Popular tools include the following:

- Alteryx.

- Datameer.

- Informatica Multidomain Master Data Management.

- Oracle Cloud Infrastructure Data Catalog.

- Precisely.

- SAP Master Data Governance.

- Talend Data Catalog.

By automating some or all parts of the data validation process, these tools minimize many common challenges observed with manual validation, which include the following:

- Validation of large data sets can be time-consuming.

- Irrelevant or outdated data might remain in the data set.

- Lack of clarity regarding validation requirements.

- High failure rate.

- High risk of error.

Data validation and ETL

Extract, transform and load (ETL) tools and data integration tools typically include data validation policies that are executed as data is extracted from a source and loaded into a target, such as a data warehouse. The validation process for ETL usually includes the following steps:

- Select a data sample from the data set and define an acceptable error rate.

- Confirm that the data set contains all the required data.

- Check if the source data's values, structure and format match the destination schema.

- Identify and remove redundant, incomplete, inconsistent or incorrect values from the data set.
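The steps above can be sketched in plain Python as follows. This is a simplified illustration under stated assumptions, not how any particular ETL tool works: the function name `validate_batch`, the default error rate of 5%, the sample size and the representation of the destination schema as a mapping of field names to Python types are all hypothetical.

```python
import random


def validate_batch(rows, schema, max_error_rate=0.05, sample_size=100, seed=0):
    """Validate rows against a destination schema before loading.

    Returns (clean_rows, sample_error_rate). Raises ValueError when the
    sampled error rate is above the acceptable threshold.
    """

    def is_valid(row):
        # Steps 2 and 3: required data is present and matches the schema type.
        return all(
            row.get(field) is not None and isinstance(row[field], field_type)
            for field, field_type in schema.items()
        )

    # Step 1: check a random sample against the acceptable error rate.
    sample = random.Random(seed).sample(rows, min(sample_size, len(rows)))
    error_rate = sum(not is_valid(r) for r in sample) / len(sample) if sample else 0.0
    if error_rate > max_error_rate:
        raise ValueError(f"sample error rate {error_rate:.0%} exceeds threshold")

    # Step 4: drop invalid rows and exact duplicates, keeping first occurrences.
    seen = set()
    clean_rows = []
    for row in rows:
        if not is_valid(row):
            continue
        key = tuple(repr(row[field]) for field in schema)
        if key not in seen:
            seen.add(key)
            clean_rows.append(row)
    return clean_rows, error_rate


# Hypothetical source rows: one duplicate, one missing value, one wrong type.
rows = [
    {"id": 1, "name": "a"},
    {"id": 1, "name": "a"},
    {"id": 2, "name": None},
    {"id": "3", "name": "c"},
]
clean, rate = validate_batch(rows, {"id": int, "name": str}, max_error_rate=0.5)
print(clean, rate)
```

Checking a sample first lets the process fail early on a badly broken source before the full data set is scanned.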

Popular open source tools, such as dbt -- data build tool -- also include data validation options and are commonly used for data transformation.
